Automatic determination of the heading text

Introduction

In previous articles dedicated to the organization of data in categories (Using count as the basis to create categories and the Problems that await any maker categories) described General ideas for organizing the categories. In this article I will describe one of the possible algorithms for automatically determining the subject matter of the text based on pre-prepared graph of categories. However, I deliberately avoid complicated formulas to convey the idea underlying the algorithm as simple as possible.

Preparation of the data categories

For starters, let define in what form we will prepare the data for the categories.

the

- 2. The text, which is determined, can be assigned to multiple categories at the same time

- 4. The theme of the text is determined for each text separately, and does not depend on the defined rubrics of other texts of earlier

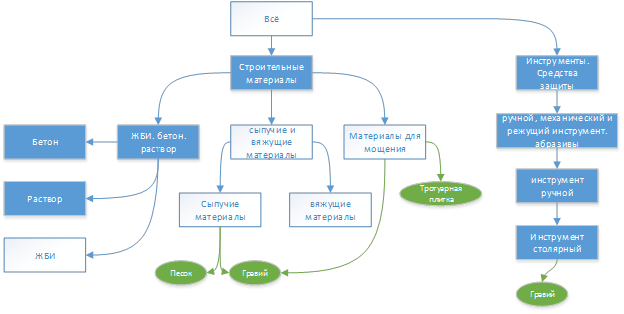

1. Categories is a graph, not a tree the

3. For each map category specify the factor of accuracy in determining headings the

The last point needs some explanation. Independence determination the subject of the text is very good, when you do not require subsequent sorting of results. When the texts are just related to rubric or not. But if the topic of several texts, will surely need to sort them according to the criterion of best hit in the category. In this article, this question is omitted for clarity.

the algorithm for determining the subject matter of the text, briefly

The described categories. Extract from the studied text keywords described in the categories. The extraction process we get pieces of the broken and often incoherent graph. Use wave (or any other, optional) the algorithm to "dotyagivaya" extracted pieces of the graph to the top of "everything". Analyze and output the results.

the algorithm for determining the subject matter of the text, in detail

The creation of categories was described in detail in previous articles. At this stage we consider that we have lined graph categories, in a sheet whose elements are keywords, and nodal-headings.

We search the entire set of key words for the rubricator in the studied text. So as words, you want to find can be a lot to recommend specific implementation to apply to the fast search algorithms of arrays of strings in the text. For the seed I provide links to the most popular ones:

Algorithm Aho — Corasick

The algorithm is characterized by a rather complex logic of tree building and handling "returns", there are many examples of implementation. habré was a good description of this algorithm.

Algorithm Rabin — Karp. the Distinctive feature of the algorithm is an efficient work with very large sets of words.

Basically, after reading describe the two proposed algorithms, it is not difficult to come up with them on the basis of its implementation, is able to take into account some additional conditions. For example, the requirement of case sensitivity, morphology, etc.

Once out of his text extracted keywords used in the description of the categories, you can proceed directly to the definition of the subject of the text.

Wave

The subject is top of all. As there are found in the text key words. The purpose of this stage is to find a path from each of the found keywords to the top of the "all".

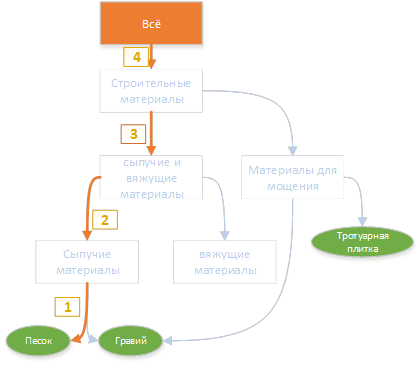

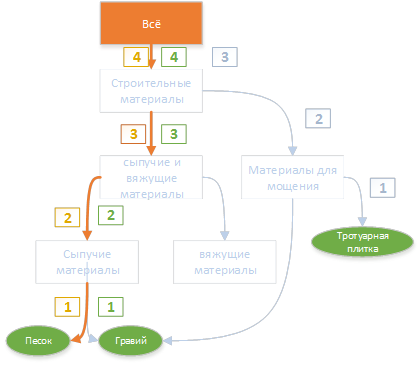

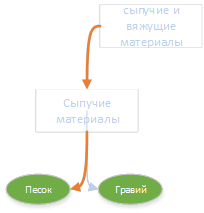

For the keyword "sand", the path to the root of the subject heading list will look as follows:

Similarly built the way to the top at all, found in the previous step.

The result of such a construction for each keyword is the way to the top.

definition of the theme

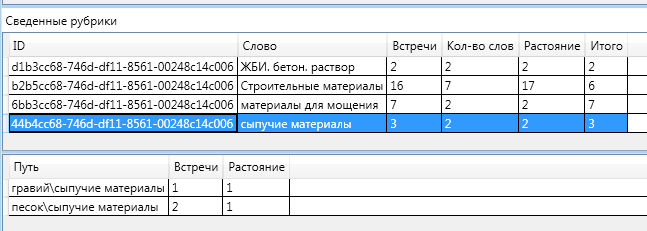

Let us now consider how it was produced automatically detect the headings in the example of article: "How to lay paving tiles?". People not even reading the article might assume that it would be about the possible ways of laying tiles and related materials and technologies.

Check how work auto detect headings for this article.

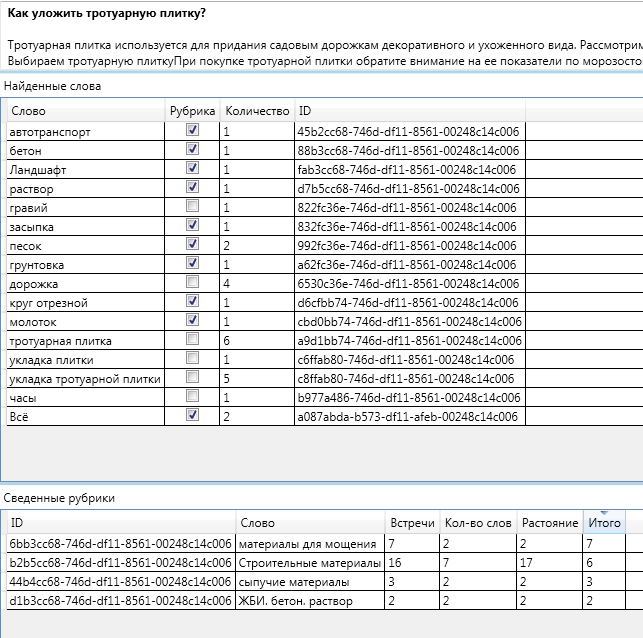

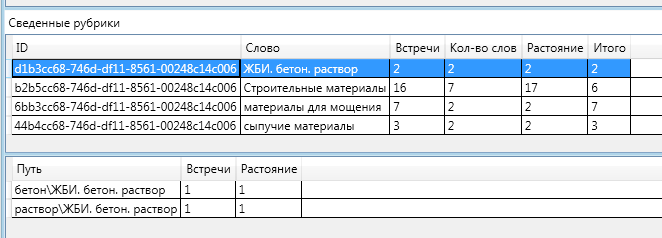

Let's start traditionally with the keywords found in the article and having a display in the categories.

In the figure, the mark "topic" means that the item categories is not sheet. This allows you to "combine" the name of a rubric and describe its key words.

The most popular word in the article "How to lay paving tiles?" is a "paving stone" that was to be expected.

Lower part of the subject that was involved in the example:

For example, it is shown that the keyword "gravel" is classified under two headings, and the key word is "paving" to only one. In the same light background of the selected columns, whose names in the text do not occur. (Remember that a keyword and a category can have the same name. Details in previous articles).

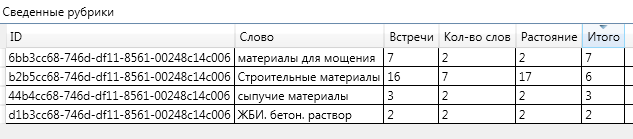

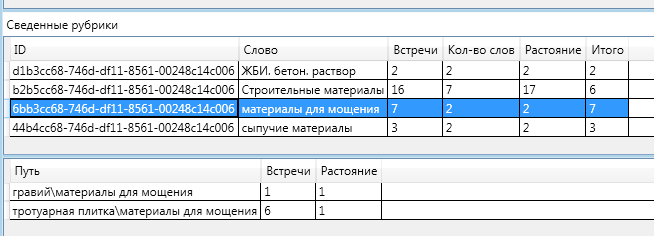

Now look at the headings, to which was referred the article:

The first category, which includes this article is the heading "pavement".

In the drawing, the selected line is a section with results and below the dataplate for each word.

The final grade belonging to this category – 7. This is the highest rating. From this column in the text was found 2 words, 7 times for a total distance of 2. Recall that the distance between two vertices in a graph is the number of edges in the shortest path.

Namely, the text was found the keyword "gravel" — once. And the key word is "paving" 6 times. Both words are a child of the heading "materials for paving".

Total: (Gravel 1 word) + (Paving slabs 6 words) + Met 2 — (1 (Distance from the word to the Gravel "pavement") +

1 (the Distance from the word Paving slabs to "the pavement"))

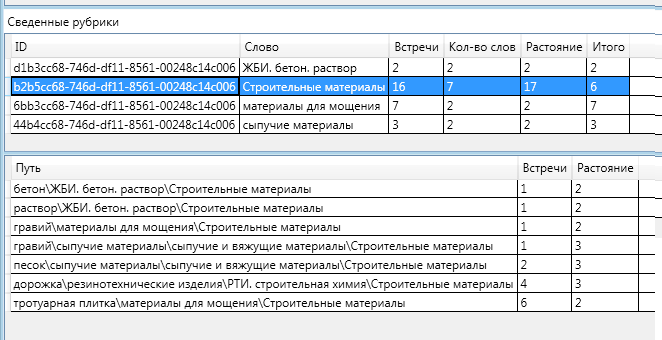

For completeness, here the schema information of the remaining headings:

It is interesting to note the fact that "on the road" to "Building materials" were two categories: "bulk materials" and "bulk and binding materials". One of them came in the final determination, and the other is not.

Sand and gravel together can be attributed to the category "Bulk material". "Bulk material" can be attributed to "Granular and astringent materials". The distance to more General (upper) heading more than the distance to more specific (lower) then the calculation formula of the relevance of the "total" is very small (down to negative.

Formula

The formula for determining the column "total" in the table as follows:

Total = "Date" + "number of words" — "Distance"

Meetings – how many times was keywords are found.

Word count – How many different words were found.

Distance – the total distance from each word to the selected category.

And this formula is not a fundamental postulate. And probably can be improved, equipped with additional elements, etc. (Suggest to discuss this in the comments).

Looking forward, I want to note that in developing this system, we specifically tried to avoid any explicit odds, in order not to get lost in the intricacies of fine tuning.

growth

At its core categories cannot be directly compiled uniformly. Certainly, a separate section will be more elaborate than others. This can lead to "bias" the count and the increasing distance from the "leaves in the top of the" in one part and a significant decrease in the other. This imbalance can have a negative impact on the correct calculation of distances in the graph. Here you can try to take into account the number of child elements at every node in the graph.

Another interesting idea on optimization of the process of determining the subject of the text is the idea of selective determination of subjects for the different sections of the text with the subsequent composition of the results.

The idea is based on the fact that, as a rule at the beginning of any article there is a section "introduction" abundant variety of keywords, in the following sections is the clarification of the idea of the article, that allows us to classify each individual head more reliably.

do it

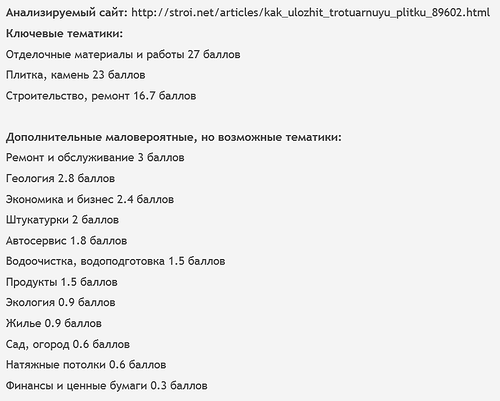

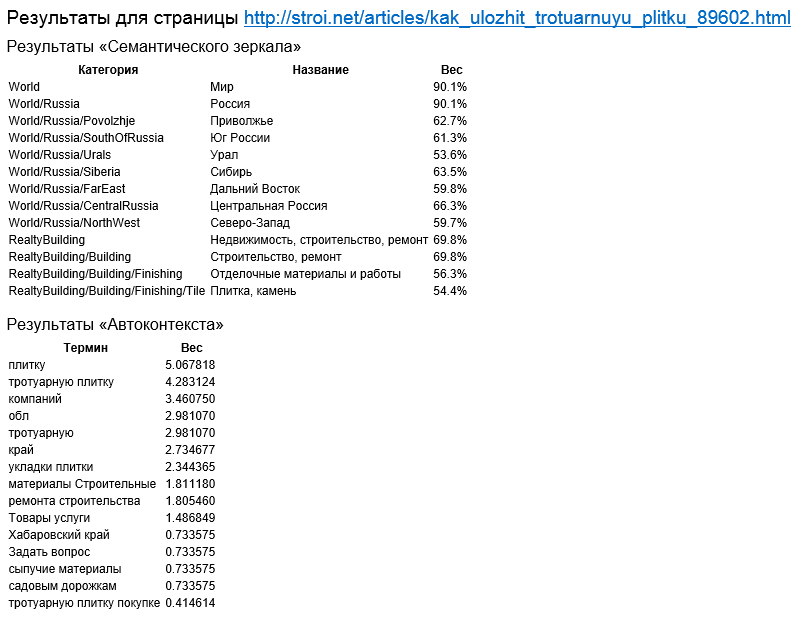

It is interesting to see the same example in other online services the definition of the subject matter.

Website http://www.linkfeedator.ru/index.php?task=tematika :

Website: http://sm.ashmanov.com/demo/

Conclusions

The topic of auto-categorization-categorization of texts is very relevant to search engines and processing systems and knowledge discovery. I hope that this article will be useful not only to those who are just starting to explore the technology of automatic classification, but also for those who ate not one dog.

Thanks

I Express my appreciation to those who have read the first two articles and offered feedback, as well as personally Andrei Bengkulu for assistance in obtaining a beautiful table numbers.

Комментарии

Отправить комментарий