Creating Shazam in Java

A couple of weeks ago, I came across this article How Shazam Works

I was wondering, how do you operate programs such as Shazam... more importantly, how hard it is to write something similar in Java?

Shazam

If someone doesn't know, Shazam is an app with which you can analyze/choose the music. Install it on your phone, and holding the microphone to any music source for 20-30 seconds, the app will detect that is this song.

On first use I had a magical feeling. "How it's done!?" And even today, when I have used them many times, this feeling does not leave me.

Wouldn't it be cool if we could write something ourselves that would cause the same feelings? It was my goal this past weekend.

Listen closely..!

First of all, before you take a sample of the music for analysis, we need to listen to the microphone via our Java application...! This is what I at that time have not had to implement in Java, so I imagined how difficult it would be.

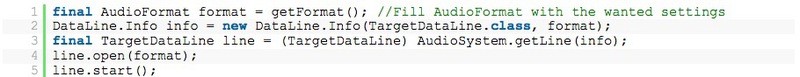

But it turns out it's pretty simple:

Now we can read data from TargetDataLine as with normal streams InputStream:

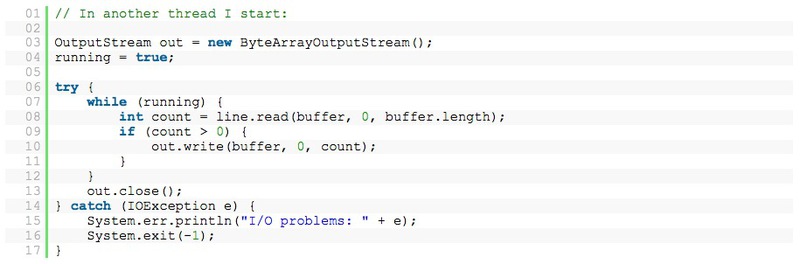

This method makes it easy to access microphone and record all the sounds! In this case I use the following AudioFormat:

So, now we have recorded the data in the ByteArrayOutputStream class, great! The first stage is passed.

Data from the microphone

The next challenge is the analysis of the data output, the data were in the form of a byte array, it was a long list of numbers like this:

Hmmm... Yes. And that's the sound?

To find out whether the data can be visualized, I threw them into Open Office and converted into a line graph:

Yes! Now it looks like "sound". Looks as if you were using Windows Sound Recorder.

This type of data is known as time domain (time domain). But at this stage these numbers are useless to us... if you read the above article about how Shazam works, then you know that they use spectrum analysis (spectral analysis) data instead of continuous time domain.

So the next important question is: "How do we transform our data in the form of spectral analysis?"

Discrete Fourier transform

To turn our data into something usable, we need to apply the so-called Discrete Fourier Transformation (Discrete Fourier Transform). That will allow us to transform our data from time domain into frequency intervals.

But this is only one problem, if you transform the data into frequency intervals, every bit of information regarding the temporary data will be lost. And so, you know the magnitude of each stroke, but you won't have the slightest idea when it should happen.

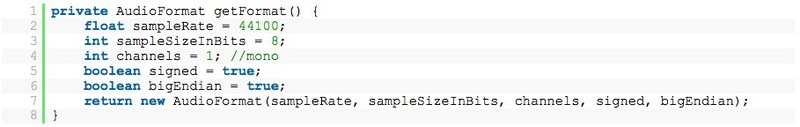

To solve this problem, we use sliding window protocols. Taking part of the data (in my case 4096 bytes of data) and convert only this bit of information. Then we know the magnitude of all fluctuations occurring within those 4096 bytes.

Usage

Instead of thinking about the Fourier Transform, I googled a bit and found code for the so-called FFT (Fast Fourier Transform). I call this code – the code that packs data:

Now we have two series packs all data – Complex[]. These series contain data for all frequencies. To visualize the data I decided to use the entire spectrum analyzer (just to make sure that my calculations are correct).

Input, Aphex Twin

This is a little off topic, but I'd like to tell you about electro musician Aphex Twin (Richard David James). He wrote crazy electronic music... but some of his songs have interesting characteristics. For example, his greatest hit Windowlicker, it bears the image of the spectrogram.

If you look at the song as on the spectral image, you'll see a beautiful spiral. Another song — the Mathematical Equation that shows the face and Twin'! Learn more here — Bastwood – Aphex Twin's face.

Run this song through my spectrum analyzer I got the following results:

Certainly not perfect, but it should be a person Twin'!

Defining key musical points

The next step in the algorithm of the app Shazam to identify some of the key points in the song, save them in the form of signs and then try to find the right song from his more than 8 million database of songs. This happens very quickly, search of sign occurs at a rate of O(1). Now it becomes clear why Shazam works so great!

As I wanted me to have it work after a weekend (unfortunately this is the maximum amount of time for me to concentrate on one thing, then I need to find a new project) I have tried to simplify my algorithm as possible. And to my surprise it worked.

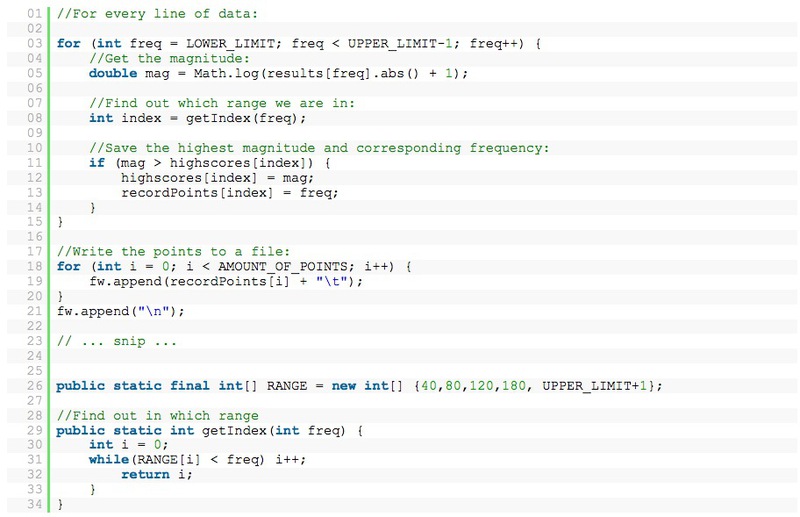

For each line in the spectral analysis, within a certain range I highlighted the point with the highest magnitude. In my case: 40-80, 80-120, 120-180, 180-300.

Now, when we record a song, we get a list of numbers, like this:

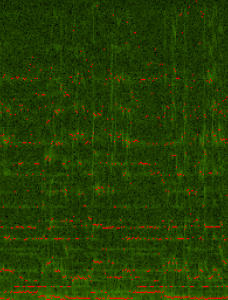

If I record a song and visualize it, it will look something like this:

(all the red dots, the "key points")

Indexing my own music

Having a working algorithm, I decided to index all my 3000 songs. Instead of a MIC you can just open mp3 files, convert them to the format you want, and consider them in the same way as when using a microphone, using an AudioInputStream. Convert stereo recordings to mono mode was harder than I thought. Examples can be found online (requires a bit more coding to post here) will have to slightly change the examples.

Process of selection

The most important part of the application – selection process. From the Shazam it is clear that they use the marks to find a match and then decide which song comes the best.

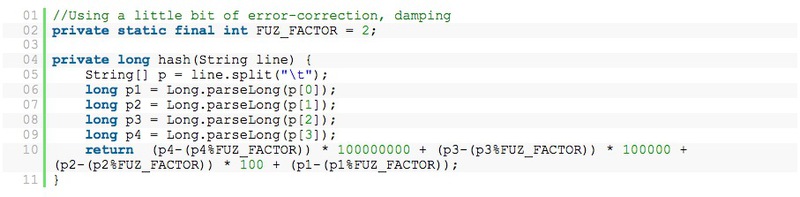

Instead of using intricate temporary groupings of points, I decided the row of our data (e.g., 33, 47, 94, 137) as a single sign: 1370944733

(in the process of testing, 3 or 4 points is the best option, more difficult with fine tuning, every time you have to reminderservice my mp3!)

An example of encoding characters, using 4 points per line:

Now I will create two arrays of data:

The list of songs, List (where the List index is Song-ID, String is songname)

Database characters: Map<Long, List>

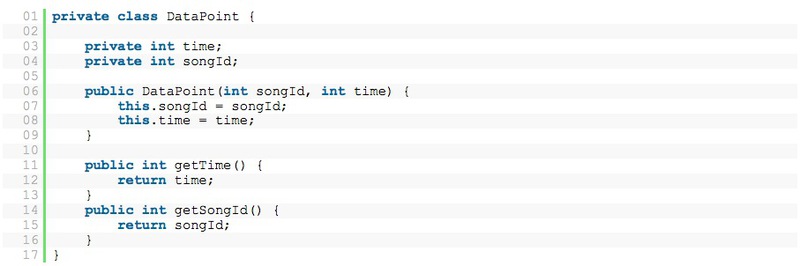

Long in the database of signs is itself a sign and includes a segment of the DataPoints.

DataPoints are as follows:

Now we have everything you need to start the search. At first I thought all the songs and generated the labels for each data point. It will be included in the database of signs.

The second step is to read data of a song we want to identify. These signs are extracted, and matching with the database.

This is only one problem, for each sign there are several hits, but how do we determine which song is the same..? The number of matches? No, that's not good.

By studying the data, I found something interesting as we have the following data:

Signs

— Matching marks of the probable options

— ID songs of the probable options

— The timeline in our own records

— Time marks the probable options

Now we can sminusovat the current time in our records (for example, line 34) with a time mark (e.g., line 1352). This difference is stored together with the ID of the song. Since this contrast, this difference tells us where we can be in the song.

After we're done with characters from our records we will stay with a bunch of ID songs and contrasts. The point is that if you have a lot of characters from the selected contrasts, then you have found your song.

Results

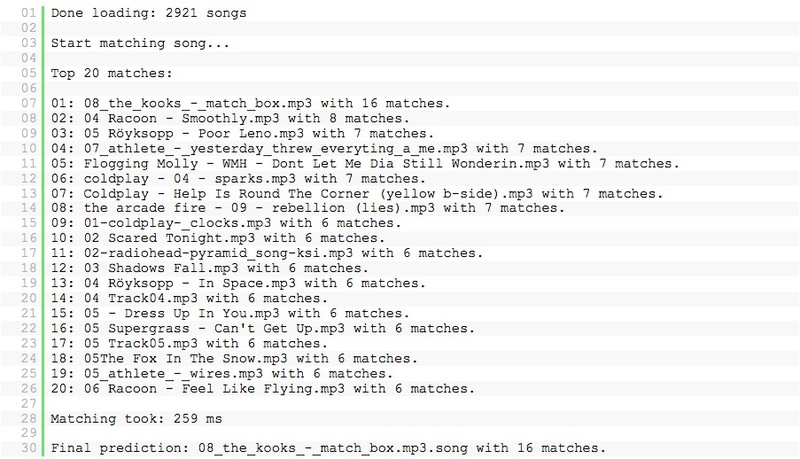

For example, listening to The Kooks – Match Box the first 20 seconds, we get the following data in my application:

Works!!!

After listening to 20 seconds it can identify almost all the songs I have. And even this live recording of the Editors, you can define after 40 seconds of listening!

And again, that feeling of magic!

Currently, the code is not completed and it works perfectly. I concocted it one weekend, it's more like a proof of the theory/study of the algorithm.

Maybe if enough people asked, I will bring it to mind, and somewhere to lay out.

Addition

The lawyers of the holders of patent rights to Shazam send me emails with a request to discontinue code and delete this topic, you can read about this here.

translator

a Little announcement: in the summer of 2013 opens the Yandex Tolstoy Summer Camp— an experimental workshop for those who want to learn how to create and run startups. Within a two-month course, Yandex will help the participants to gather a worthy team to correctly formulate the concept, to obtain feedback from the proper experts and to develop a prototype of the project. A range of seminars and workshops will allow you to upgrade the essential skeels. Also, we will regularly publish interesting translated articles to start about the subject. If you come across something interesting, throw in PM! KST, article on habré as Yandex learned to identify music.

Apply now (2 days left!!) and find more information here. And in the announcement on Habra. I'm going to talk about the Lean methods, Customer Development and MVP. Come!

Комментарии

Отправить комментарий